Introduction

A typical LLM use case is interpreting natural-language queries from an end user. However, an LLM's knowledge is limited to its training data. Say a user asks, "Help me with current weather in Chicago." Modern LLMs can understand this query, but on their own they have no means of fulfilling it.

Here is an example chat with the Microsoft Phi3 model, deployed locally using Ollama.

    from adalflow.core.generator import Generator
    from adalflow.components.model_client.ollama_client import OllamaClient

    host = "127.0.0.1:11434"

    prompt_template = """
    <SYS>{{task_desc_str}}<SYS>
    Answer the question: {{input_str}}
    You:
    """

    generator = Generator(
        model_client=OllamaClient(host=host),
        model_kwargs={"model": "phi3:latest"},
        template=prompt_template,
        prompt_kwargs={"task_desc_str": "You are a useful assistant."},
    )

    query = {"input_str": "Help me with current weather in Chicago"}
    out = generator(query)

    print(f"Query: {query['input_str']}\n")
    print(out.data)

Output:

    Query: Help me with current weather in Chicago

    As of my last update, I don't have real-time capabilities. To find out today's weather in Chicago or any other location you're interested in, please check a reliable source like The Weather Channel, AccuWeather, BBC Weather, or use the search function on their official website for accurate and up-to-date information from your local area!

Phi3 succeeded in interpreting the user request, yet it has no means to complete it.

Function calling

OpenAI defines function calling as

"Function calling allows you to connect models like gpt-4o to external tools and systems. This is useful for many things such as empowering AI assistants with capabilities, or building deep integrations between your applications and the models."

Prompts are the only way to communicate with language models. Effective prompting achieves the "Deep integration" mentioned in the above definition.

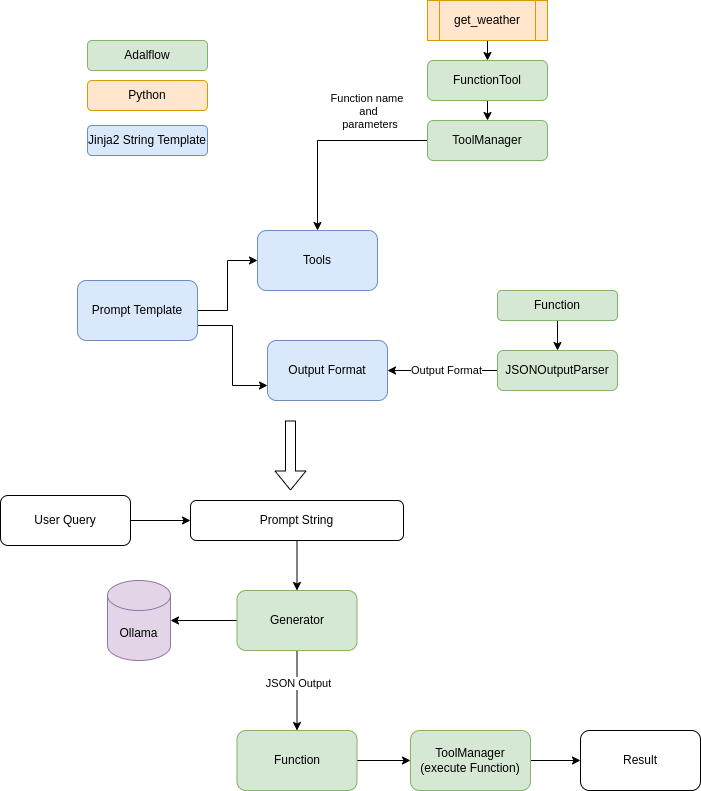

The steps involved in enabling function calling with an existing LLM are:

1. Develop the required functions. If you want your LLM to work as a mini calculator, be ready with functions that can perform add, subtract, multiply, and divide.

2. Include these function definitions and their parameters in the prompt.

3. Again through the prompt, specify the expected output format. Say you want the LLM to return JSON with two keys: the first key's value is the function name, and the second key's value is the list of parameters to pass to the function.

4. The final step is to call the function with the specified parameters and return the results to the user.
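The four steps can be sketched end to end with the mini calculator mentioned in step 1. This is a minimal illustration using only the standard library, not AdalFlow's API: the names `TOOLS`, `build_prompt`, and `run_tool_call` are hypothetical, and a hard-coded string stands in for a real model reply.

```python
import json

# Step 1: the functions the model is allowed to call.
def add(a, b):
    return a + b

def subtract(a, b):
    return a - b

def multiply(a, b):
    return a * b

def divide(a, b):
    return a / b

# Hypothetical registry mapping function names to callables.
TOOLS = {f.__name__: f for f in (add, subtract, multiply, divide)}

# Steps 2 and 3: describe the tools and the expected reply format in the prompt.
def build_prompt(question: str) -> str:
    tool_lines = "\n".join(f"- {name}(a, b)" for name in TOOLS)
    return (
        "You can call these functions:\n"
        f"{tool_lines}\n"
        'Reply only with JSON like {"name": "<function>", "args": [<a>, <b>]}.\n'
        f"Question: {question}"
    )

# Step 4: parse the model's reply and run the requested function.
def run_tool_call(model_reply: str):
    call = json.loads(model_reply)
    func = TOOLS[call["name"]]
    return func(*call["args"])

# A hard-coded reply stands in for a real model response (assumption).
fake_reply = '{"name": "multiply", "args": [6, 7]}'
print(build_prompt("What is 6 times 7?"))
print(run_tool_call(fake_reply))  # prints 42
```

The rest of this walkthrough follows the same shape, with AdalFlow generating the tool descriptions and output format instead of hand-written strings.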

Start by writing a Python function to get the temperature for a given location. Using the geopy library, convert the location to latitude and longitude, then pass these coordinates to the Open-Meteo API to retrieve the temperature.

    def get_weather(location_str: str):
        """ Get the current weather in a given location"""
        from geopy.geocoders import Nominatim
        import openmeteo_requests
        import requests_cache
        from retry_requests import retry
        import json

        temperature = None
        try:
            # Get the latitude and longitude for the given location
            geolocator = Nominatim(user_agent="function_calling_demo")
            location = geolocator.geocode(location_str)

            # Set up the Open-Meteo API client with cache and retry on error
            cache_session = requests_cache.CachedSession('.cache', expire_after=3600)
            retry_session = retry(cache_session, retries=5, backoff_factor=0.2)
            openmeteo = openmeteo_requests.Client(session=retry_session)

            url = "https://api.open-meteo.com/v1/forecast"
            params = {
                "latitude": location.latitude,
                "longitude": location.longitude,
                "current": ["temperature_2m", "rain", "snowfall"],
                "temperature_unit": "fahrenheit",
            }
            responses = openmeteo.weather_api(url, params=params)
            response = responses[0]

            # Variables(0) is temperature_2m, the first entry in "current".
            # The request asks for Fahrenheit, so label the value accordingly.
            current = response.Current()
            temperature = str(round(current.Variables(0).Value(), 2)) + " fahrenheit"
        except Exception:
            print("Unable to get weather")
            return json.dumps({"location": location_str, "temperature": "Error fetching temperature"})

        return json.dumps({"location": location_str, "temperature": temperature})

    get_weather("delhi")

Output:

    '{"location": "delhi", "temperature": "77.18 fahrenheit"}'

The next step is to provide this function definition and its parameters to our prompt. Let us modify the earlier prompt template to accommodate function definitions.

    prompt_template = """
    <SYS>{{task_desc_str}}
    You have the following tools available.
    {% if tools %}
    {# tools list #}
    {% for tool in tools %}
    {{loop.index}}
    {{tool}}
    {% endfor %}
    {% endif %}
    <OUTPUT_FORMAT>
    {{ output_format_str }}
    </OUTPUT_FORMAT>
    <SYS>
    Answer the question: {{input_str}}
    You:
    """

We add two placeholders, tools and output_format_str. For this demo we have a single function, get_weather. More complex use cases need to pass several function definitions to the LLM, hence the loop over tools. The second placeholder, output_format_str, defines the output we expect from the LLM.

FunctionTool and ToolManager

AdalFlow provides two containers, FunctionTool and ToolManager. FunctionTool primarily helps describe a function to the LLM.

    from adalflow.core.func_tool import FunctionTool

    weather_tool = FunctionTool(get_weather)

    # Describe the function to the LLM
    print(weather_tool.definition.to_json())

Output:

    {
        "func_name": "get_weather",
        "func_desc": "get_weather(location_str: str)\n Get the current weather in a given location",
        "func_parameters": {
            "type": "object",
            "properties": {
                "location_str": {
                    "type": "str"
                }
            },
            "required": [
                "location_str"
            ]
        }
    }

The output above shows what FunctionTool generated for the get_weather function. We can pass this definition to the prompt.
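To make the idea concrete, a description of this kind can be derived from a function's signature and docstring with the standard `inspect` module. The sketch below is an illustration only and is not how AdalFlow implements FunctionTool; `describe_function` is a hypothetical helper, and the `get_weather` stub merely has the same signature as the real function.

```python
import inspect
import json

def describe_function(func) -> dict:
    """Build a JSON-friendly description from a signature and docstring."""
    signature = inspect.signature(func)
    properties = {}
    required = []
    for name, param in signature.parameters.items():
        # Use the annotation's name when one is present, otherwise "Any".
        if param.annotation is inspect.Parameter.empty:
            type_name = "Any"
        else:
            type_name = getattr(param.annotation, "__name__", "Any")
        properties[name] = {"type": type_name}
        # Parameters without a default value must be supplied by the caller.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "func_name": func.__name__,
        "func_desc": f"{func.__name__}{signature}\n{inspect.getdoc(func) or ''}",
        "func_parameters": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }

def get_weather(location_str: str):
    """Get the current weather in a given location"""
    return None  # stub: only the signature and docstring matter here

print(json.dumps(describe_function(get_weather), indent=2))
```

The point is that the LLM never sees the function body, only its name, signature, docstring, and parameter types, so a clear docstring and type hints directly improve the description.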

ToolManager manages all the functions; in our case there is only one. Using the tool manager, we can pass every function definition to our prompt.

    from adalflow.core.tool_manager import ToolManager
    from adalflow.core import Prompt

    tool_manager = ToolManager(tools=[weather_tool])

    function_prompt = Prompt(
        template=prompt_template,
        prompt_kwargs={"tools": tool_manager.yaml_definitions},
    )

    print(function_prompt(task_desc_str="You are an intelligent assistant."))

Output:

    <SYS>You are an intelligent assistant.
    You have the following tools available.
    1
    func_name: get_weather
    func_desc: "get_weather(location_str: str)\n Get the current weather in a given location"
    func_parameters:
      type: object
      properties:
        location_str:
          type: str
      required:
      - location_str
    <OUTPUT_FORMAT>
    None
    </OUTPUT_FORMAT>
    <SYS>
    Answer the question: None
    You:

Let us move to step 3. We expect standard JSON output from the large language model. AdalFlow provides Function, a dataclass that describes a function call with three attributes: name for the function name, args for positional arguments, and kwargs for keyword arguments. We use the Function dataclass with JsonOutputParser to generate the output format instructions to pass to the prompt.

Output Parser

    from adalflow.components.output_parsers import JsonOutputParser
    from adalflow.core.types import Function  # the Function dataclass used below

    func_parser = JsonOutputParser(data_class=Function, exclude_fields=["thought", "args"])
    instructions = func_parser.format_instructions()
    print(instructions)

Output:

    Your output should be formatted as a standard JSON instance with the following schema:
    ```
    {
        "name": "The name of the function (str) (optional)",
        "kwargs": "The keyword arguments of the function (Optional[Dict[str, object]]) (optional)"
    }
    ```
    -Make sure to always enclose the JSON output in triple backticks (```). Please do not add anything other than valid JSON output!
    -Use double quotes for the keys and string values.
    -DO NOT mistaken the "properties" and "type" in the schema as the actual fields in the JSON output.
    -Follow the JSON formatting conventions.
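Because these instructions ask the model to wrap its JSON in triple backticks, whatever consumes the reply has to strip that wrapper before parsing. The following is a rough standard-library sketch of that step, not AdalFlow's parser; `extract_json` is a hypothetical helper and the sample reply is made up for illustration.

```python
import json
import re

# Three backticks, built programmatically so this example stays easy to embed.
FENCE = "`" * 3

def extract_json(reply: str) -> dict:
    """Return the JSON object from a reply, with or without a backtick wrapper."""
    pattern = re.escape(FENCE) + r"(?:json)?\s*(.*?)\s*" + re.escape(FENCE)
    match = re.search(pattern, reply, flags=re.DOTALL)
    # Fall back to the whole reply when the model skipped the wrapper.
    payload = match.group(1) if match else reply
    return json.loads(payload)

# Made-up reply in the format the instructions request (assumption).
reply = f'{FENCE}\n{{"name": "get_weather", "kwargs": {{"location_str": "Chicago"}}}}\n{FENCE}'
print(extract_json(reply))
```

Small local models do not always follow format instructions exactly, so `json.loads` may still raise `json.JSONDecodeError`; callers should be prepared to catch it and retry or report the failure.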

Let us now use the output format and enrich the prompt.

    print(function_prompt(task_desc_str="You are an intelligent assistant.", output_format_str=instructions))

Output:

    <SYS>You are an intelligent assistant.
    You have the following tools available.
    1
    func_name: get_weather
    func_desc: "get_weather(location_str: str)\n Get the current weather in a given location"
    func_parameters:
      type: object
      properties:
        location_str:
          type: str
      required:
      - location_str
    <OUTPUT_FORMAT>
    Your output should be formatted as a standard JSON instance with the following schema:
    ```
    {
        "name": "The name of the function (str) (optional)",
        "kwargs": "The keyword arguments of the function (Optional[Dict[str, object]]) (optional)"
    }
    ```
    -Make sure to always enclose the JSON output in triple backticks (```). Please do not add anything other than valid JSON output!
    -Use double quotes for the keys and string values.
    -DO NOT mistaken the "properties" and "type" in the schema as the actual fields in the JSON output.
    -Follow the JSON formatting conventions.
    </OUTPUT_FORMAT>
    <SYS>
    Answer the question: None
    You:

Our prompt is ready. It includes the tools we want to expose and the output format we expect from the LLM. Let us pass this to our generator.

    prompt_template = """
    <SYS>{{task_desc_str}}
    You have the following tools available.
    {% if tools %}
    {# tools list #}
    {% for tool in tools %}
    {{loop.index}}
    {{tool}}
    {% endfor %}
    {% endif %}
    <OUTPUT_FORMAT>
    {{ output_format_str }}
    </OUTPUT_FORMAT>
    <SYS>
    Answer the question: {{input_str}}
    You:
    """

    prompt_kwargs = {
        "tools": tool_manager.yaml_definitions,
        "task_desc_str": "You are an intelligent assistant.",
        "output_format_str": instructions,
    }

    generator = Generator(
        model_client=OllamaClient(host=host),
        model_kwargs={"model": "phi3:latest"},
        template=prompt_template,
        prompt_kwargs=prompt_kwargs,
        # Parse the model reply with the JsonOutputParser created earlier
        output_processors=func_parser,
    )

    query = {"input_str": "Help me with current weather in Chicago"}
    out = generator(query)

    print(f"Query: {query['input_str']}\n")
    print(out.data)

Output:

    Query: Help me with current weather in Chicago

    {'func_name': 'get_weather', 'kwargs': {'location_str': 'Chicago'}}

We pass the JSON returned by the LLM back into our ToolManager to execute the function.

    executable_fn = Function(name=out.data['func_name'], kwargs=out.data['kwargs'])
    final_output = tool_manager.execute_func(executable_fn)
    print(final_output.output)

Output:

    {"location": "Chicago", "temperature": "59.2 fahrenheit"}

This completes all four steps needed to put function calling together.
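One detail worth noting: the output format asked for a `name` key, yet the model's reply used `func_name`, the key it saw in the tool definition. A slightly more defensive dispatch step could accept either key and reject unknown functions before calling anything. The sketch below uses plain Python rather than ToolManager; `REGISTRY`, `dispatch`, and `get_weather_stub` are hypothetical names, and the stub replaces the real weather lookup so the example runs offline.

```python
import json

def get_weather_stub(location_str: str) -> str:
    """Offline stand-in for get_weather (assumption for this sketch)."""
    return json.dumps({"location": location_str, "temperature": "unknown"})

# Hypothetical registry of callable tools, keyed by the name shown to the model.
REGISTRY = {"get_weather": get_weather_stub}

def dispatch(call: dict) -> str:
    """Run the function named in a parsed model reply."""
    # Accept either key, since models may echo "func_name" from the tool list.
    name = call.get("name") or call.get("func_name")
    if name not in REGISTRY:
        raise ValueError(f"Model requested an unknown function: {name!r}")
    kwargs = call.get("kwargs") or {}
    return REGISTRY[name](**kwargs)

print(dispatch({"func_name": "get_weather", "kwargs": {"location_str": "Chicago"}}))
```

Validating the function name first matters because the reply is model-generated text: only functions you have explicitly registered should ever be executed.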